
As decentralized AI becomes a more critical part of the blockchain ecosystem, developers are increasingly seeking ways to make AI operations transparent, verifiable, and reward-driven. In our recent live workshop, Pavel and Thiru from the LazAI team presented a deep dive into building onchain AI agents using the LazAI frameworks, focusing on data contribution, job orchestration, and secure inference execution.

This blog post summarizes the core concepts, workflows, and tools covered during the session. If you prefer video, check out the YouTube live recording here.

Traditional AI systems are often opaque. As users, we rarely know if the model used the right data, whether the inference process was tampered with, or if results can be trusted, especially in high-stakes financial operations. LazAI addresses this trust gap by creating a verifiable AI marketplace that cryptographically registers data, distributes jobs, and confirms results through a decentralized settlement layer.

LazAI’s goal is to create a full-stack solution where data submission, processing, and outcome validation all happen onchain.

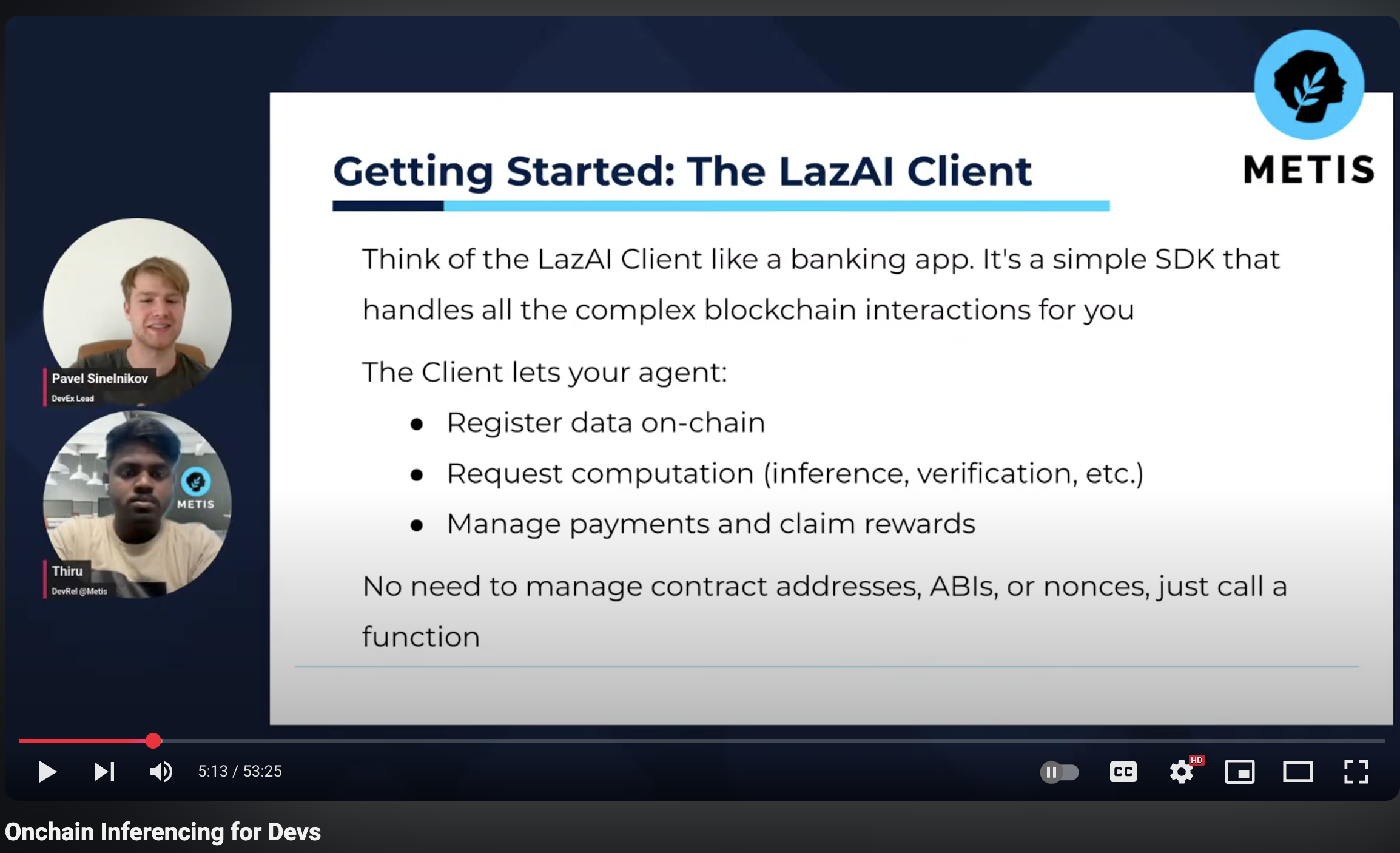

At the heart of this architecture lies Alith, a client-side framework for building AI agents. Underpinning Alith is the LazAI Client, which handles all onchain interactions, including job execution and settlement, on the Hyperion blockchain.

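The lifecycle of a typical onchain AI task has three stages: data is registered onchain, jobs are distributed to nodes for processing, and results are confirmed through the settlement layer.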
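The ordering of these stages can be modeled as a small state machine. This is an illustrative sketch only: the stage names (`registered`, `processing`, `completed`, `settled`) and the `advance` helper are hypothetical and are not identifiers from the LazAI Client. It simply enforces the order in which data is registered, a node processes the job, and the result is settled onchain.

```javascript
// Hypothetical stage names for an onchain AI task; these are not LazAI API identifiers.
const NEXT_STAGE = {
  registered: "processing", // data has been registered in the Data Registry
  processing: "completed",  // a node is running the job
  completed: "settled",     // the node has returned a result
  settled: null,            // the result has been confirmed; terminal stage
};

// Returns a new task object moved to its next stage, or throws if that is not allowed.
function advance(task) {
  const next = NEXT_STAGE[task.stage];
  if (next === undefined) {
    throw new Error(`Unknown stage: ${task.stage}`);
  }
  if (next === null) {
    throw new Error(`Task ${task.id} is already settled`);
  }
  return { ...task, stage: next, history: [...task.history, next] };
}

// Walk one task through the full lifecycle.
let task = { id: "job-1", stage: "registered", history: ["registered"] };
while (NEXT_STAGE[task.stage] !== null) {
  task = advance(task);
}
console.log(task.history.join(" -> "));
// prints: registered -> processing -> completed -> settled
```

Returning a new object from `advance` instead of mutating the task keeps each step's history intact, which loosely mirrors how onchain state changes are recorded rather than overwritten.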

The session featured multiple examples, particularly using Python and Node.js. A few highlights:

Registering Data

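```javascript
// Assumes `client` is an initialized LazAI Client instance (setup not shown in the session).
// Register an encrypted file, then request a proof for the registered file.
const file = await client.addFile("encrypted_file.json");
const proofRequest = await client.requestProof(file.fileId);
```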
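The file passed to `addFile` in the snippet above (`encrypted_file.json`) is already encrypted, but the workshop snippet does not show how. The following is a sketch assuming AES-256-GCM from Node's built-in `node:crypto` module; LazAI's actual encryption scheme and key management may differ, and `encryptJson` and `decryptJson` are hypothetical helper names.

```javascript
const crypto = require("node:crypto");

// Encrypts a JSON-serializable value with AES-256-GCM (an assumed scheme, not
// confirmed as LazAI's). Returns the IV, auth tag, and ciphertext as base64.
function encryptJson(value, key) {
  const iv = crypto.randomBytes(12); // 96-bit nonce, the recommended size for GCM
  const cipher = crypto.createCipheriv("aes-256-gcm", key, iv);
  const plaintext = Buffer.from(JSON.stringify(value), "utf8");
  const ciphertext = Buffer.concat([cipher.update(plaintext), cipher.final()]);
  return {
    iv: iv.toString("base64"),
    tag: cipher.getAuthTag().toString("base64"),
    data: ciphertext.toString("base64"),
  };
}

// Reverses encryptJson; decipher.final() throws if the ciphertext or tag was altered.
function decryptJson(payload, key) {
  const decipher = crypto.createDecipheriv(
    "aes-256-gcm",
    key,
    Buffer.from(payload.iv, "base64"),
  );
  decipher.setAuthTag(Buffer.from(payload.tag, "base64"));
  const plaintext = Buffer.concat([
    decipher.update(Buffer.from(payload.data, "base64")),
    decipher.final(),
  ]);
  return JSON.parse(plaintext.toString("utf8"));
}

// Usage: encrypt a record and confirm it decrypts. In practice `encrypted` would be
// saved as encrypted_file.json before the file is registered.
const key = crypto.randomBytes(32); // 256-bit key; keep it off-chain and private
const encrypted = encryptJson({ text: "Hello, world" }, key);
const roundTrip = decryptJson(encrypted, key);
console.log(roundTrip.text); // Hello, world
```

GCM is used here because it authenticates as well as encrypts, so a tampered file fails to decrypt instead of silently yielding corrupted data.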

Running Inference

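```javascript
import axios from "axios";

// Assumes `client` is an initialized LazAI Client and `nodeAddress` is the
// address of a registered inference node.
const inferenceUrl = await client.getNodeUrl(nodeAddress);

// Send the registered file ID and a prompt to the node's inference endpoint.
const result = await axios.post(`${inferenceUrl}/v1/infer`, {
  fileId: "74",
  prompt: "Translate to French",
});
```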
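The request above can be wrapped in a small helper that validates its inputs and checks the HTTP status before trusting the response. This sketch uses the global `fetch` available in Node.js 18 and later instead of `axios`, and accepts the fetch function as a parameter so it can be tested without a live node. The `/v1/infer` path and the `fileId`/`prompt` fields come from the workshop snippet; the response format is not documented in the session, so the helper returns the parsed JSON unchanged. `buildInferenceRequest` and `runInference` are hypothetical names.

```javascript
// Builds the URL and JSON body for an inference call against a node's base URL.
function buildInferenceRequest(nodeUrl, fileId, prompt) {
  if (!nodeUrl || !prompt) {
    throw new Error("nodeUrl and prompt are required");
  }
  const base = nodeUrl.replace(/\/+$/, ""); // drop trailing slashes before appending the path
  return {
    url: `${base}/v1/infer`,
    body: JSON.stringify({ fileId: String(fileId), prompt }),
  };
}

// Sends the request and returns the parsed JSON response.
// fetchImpl defaults to the global fetch (Node.js 18+) but can be swapped for tests.
async function runInference(nodeUrl, fileId, prompt, fetchImpl = fetch) {
  const { url, body } = buildInferenceRequest(nodeUrl, fileId, prompt);
  const response = await fetchImpl(url, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body,
  });
  if (!response.ok) {
    throw new Error(`Inference failed with HTTP ${response.status}`);
  }
  return response.json();
}
```

From an async context, a call would look like `const output = await runInference(inferenceUrl, "74", "Translate to French");`. Failing loudly on a non-2xx status keeps an error page from being mistaken for a model result.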

Verifying Data Onchain

The smart contract stores the file hash and verifies existence through proofs generated by nodes.
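At its simplest, checking that registered data is unchanged means recomputing its hash and comparing it with the stored value. The sketch below uses SHA-256 from `node:crypto` and an in-memory `Map` standing in for the Data Registry contract. The hash function LazAI actually uses and the node-generated proofs are not modeled here, and `HashRegistry` is a hypothetical class.

```javascript
const { createHash } = require("node:crypto");

// Hex-encoded SHA-256 digest of a string or Buffer.
function sha256Hex(content) {
  return createHash("sha256").update(content).digest("hex");
}

// In-memory stand-in for an onchain registry that maps file IDs to content hashes.
class HashRegistry {
  constructor() {
    this.hashes = new Map();
  }

  // Records the hash of the content; a real contract would store this onchain.
  register(fileId, content) {
    if (this.hashes.has(fileId)) {
      throw new Error(`File ${fileId} is already registered`);
    }
    this.hashes.set(fileId, sha256Hex(content));
  }

  // True only if the file is registered and the content still hashes to the stored value.
  verify(fileId, content) {
    return this.hashes.get(fileId) === sha256Hex(content);
  }
}

const registry = new HashRegistry();
registry.register("74", '{"text":"Hello"}');
console.log(registry.verify("74", '{"text":"Hello"}'));  // true
console.log(registry.verify("74", '{"text":"Hello!"}')); // false
```

Storing only the hash keeps the data itself off-chain while still letting anyone detect a one-character change.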

Each contract (Data Registry, AI Process, Settlement) exposes functions for its own stage of the workflow, which keeps the system modular and makes each step traceable.
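The separation of concerns can be sketched with three small modules that share an append-only event log, so any job can be traced from data registration to settlement by its IDs. The module names mirror the contracts named above, but their methods, the event names, the reward `amount`, and the log itself are hypothetical illustrations, not the contracts' real interfaces.

```javascript
// Append-only log shared by the modules; every entry carries the IDs needed to trace a job.
const events = [];
const emit = (type, fields) => events.push(Object.freeze({ type, ...fields }));

// Each module owns one responsibility, mirroring the three contracts.
const dataRegistry = {
  files: new Set(),
  registerFile(fileId) {
    this.files.add(fileId);
    emit("FileRegistered", { fileId });
  },
};

const aiProcess = {
  // Accepts a result only for a file the registry knows about.
  submitResult(jobId, fileId, output) {
    if (!dataRegistry.files.has(fileId)) {
      throw new Error(`Unknown file ${fileId}`);
    }
    emit("ResultSubmitted", { jobId, fileId, output });
  },
};

const settlement = {
  // Settles a job only if a result for it has already been submitted.
  settle(jobId, amount) {
    const submitted = events.some((e) => e.type === "ResultSubmitted" && e.jobId === jobId);
    if (!submitted) {
      throw new Error(`No result for job ${jobId}`);
    }
    emit("JobSettled", { jobId, amount });
  },
};

// Trace one job end to end.
dataRegistry.registerFile("74");
aiProcess.submitResult("job-1", "74", "Bonjour");
settlement.settle("job-1", 10);
console.log(events.map((e) => e.type).join(" -> "));
// prints: FileRegistered -> ResultSubmitted -> JobSettled
```

Because each module checks only the preconditions of its own stage, a step cannot be skipped, and the shared log is enough to reconstruct what happened to any job.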

LazAI offers SDKs for multiple languages, including Python and Node.js, the two used for the examples in this session.

The team maintains open repositories on GitHub under the 0xLazAI organization. Developers can access documentation, boilerplate code, and examples for integrating with the network.

As part of HyperHack, participants are encouraged to design architectural diagrams and build implementations using the LazAI system. The focus should be on conceptual integration rather than complete technical deployment.

The LazAI framework represents a step forward in making AI computation trustless, decentralized, and fairly incentivized. By leveraging cryptographic proofs, tokenized data ownership, and modular smart contracts, developers can now build AI agents that are not only powerful but also transparent and verifiable.

Watch the Full Workshop:

Onchain AI Inferencing Workshop on YouTube